Deploy Dataristix™ into an Azure™ Container Group with Managed Identity

In this example, we deploy Dataristix into a Microsoft™ Azure Container Group, assign a user-assigned managed identity, then use the Dataristix Connector for SQL Server™ to store data in an Azure SQL Server database without configuring credentials on the client side.

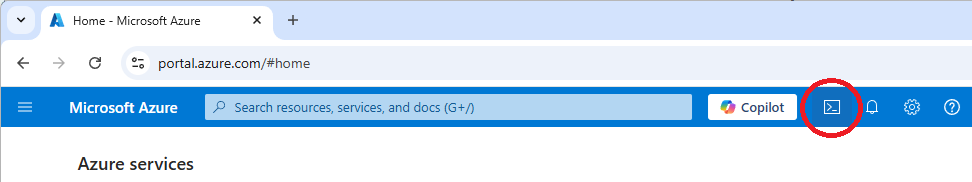

We use the Azure Cloud Shell and Terraform™ to set up the example. Terraform comes pre-installed with the Cloud Shell. Launch Cloud Shell from its icon in the Azure portal's title bar.

This example deploys an unsecured Dataristix instance as a container group. Adjust the example to secure the instance. For example, utilize a new or existing Application Gateway as a secure frontend. The deployment will incur costs for your Microsoft Azure subscription.

You can find the entire Terraform file at the end of this article. Upload the file into your Cloud Shell, then follow these steps.

- If you don't have a free or paid Docker account yet, then head over to Docker and create an account. This is necessary to avoid rate limits when downloading Dataristix container images from Docker Hub. Should you encounter a "409" error code when attempting to download container images, then you have probably encountered that limit. Create a Docker personal access token that at least allows reading of public container images and use it as the password.

- Set up environment variables for Terraform so that it can find the Docker credentials by running the following PowerShell commands in Cloud Shell:

$env:TF_VAR_DOCKER_USERNAME="<your username>"

$env:TF_VAR_DOCKER_PASSWORD="<your password>"

- Review the Terraform file. Adjust the location of the resource group as required. You may also want to choose a unique name for your container group and other resources instead of "dataristix1".

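# create Dataristix resource group
resource "azurerm_resource_group" "dataristix1" {
  name     = "dataristix1"
  location = "centralus"
}

- If you have not yet initialized the working directory, run "terraform init" first so that the providers are installed. Then check that the file is parsed correctly by running the command: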

terraform plan

This should complete without errors and tell you which resources are going to be created. Initially allocate vCPUs and memory generously to the Dataristix containers. Once your typical Dataristix workload is up and running, you may want to scale back resource requirements for each container based on observed usage.

- If you are happy with the Terraform plan, then apply as follows:

terraform apply

After a few minutes, you'll have in your Microsoft Azure subscription:

- A new resource group called "dataristix1"

- A new storage account called "dataristix1datastorage"

- A new storage account called "dataristix1secretstorage"

- A new container group called "dataristix1_container_group"

- A new User Managed Identity called "dataristix1_user_assigned_identity", attached to your container group.

Review access to the storage accounts and restrict access as appropriate.

If you have not modified the example, then you will also have a public IP address attached to your container group and the Dataristix instance is publicly accessible on the Internet. At a minimum, browse to the Dataristix instance, add an Administrator user, and enable User Access Control. Secure your instance as soon as possible. Until then, traffic to and from the instance is unencrypted. Use briefly for testing only!

To determine the public IP address of your container group, type "Container Instances" into the Azure portal's search bar and select "dataristix1_container_group". The public IP address is listed in the Overview. To browse, enter the IP address and port 8282 into your browser:

http://<container group ip address>:8282
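As an alternative to looking up the address in the portal, an output value could be added to the Terraform file so that the URL is printed when "terraform apply" finishes. This is a minimal sketch that assumes the resource names used in this example; the output name "dataristix1_url" is an arbitrary choice and is not part of the original file.

```hcl
# Hypothetical addition to the example Terraform file (not in the original).
# "ip_address" is an attribute exported by the azurerm_container_group resource.
output "dataristix1_url" {
  description = "URL of the Dataristix instance (unencrypted, for brief testing only)"
  value       = "http://${azurerm_container_group.dataristix1_container_group.ip_address}:8282"
}
```

With this in place, running "terraform output dataristix1_url" in Cloud Shell should print the URL again at any later time.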

Setting up SQL Server

Now that the Dataristix instance is up and running, we'd like to connect to a SQL Server database and store some data in it. We assume that you already have a suitable Azure SQL database available that is accessible by other Azure services; if not, then create a SQL Server and SQL Server database first.

Connect to your database with SQL Server Management Studio 20 or another suitable client, then create the container group user and grant permissions to the database using the following queries (adapt permissions to suit):

CREATE USER [dataristix1_user_assigned_identity] FROM EXTERNAL PROVIDER;

ALTER ROLE db_datareader ADD MEMBER [dataristix1_user_assigned_identity];

ALTER ROLE db_datawriter ADD MEMBER [dataristix1_user_assigned_identity];

ALTER ROLE db_ddladmin ADD MEMBER [dataristix1_user_assigned_identity];

Your Dataristix instance is now ready to exchange data with your database.

Setting up Dataristix

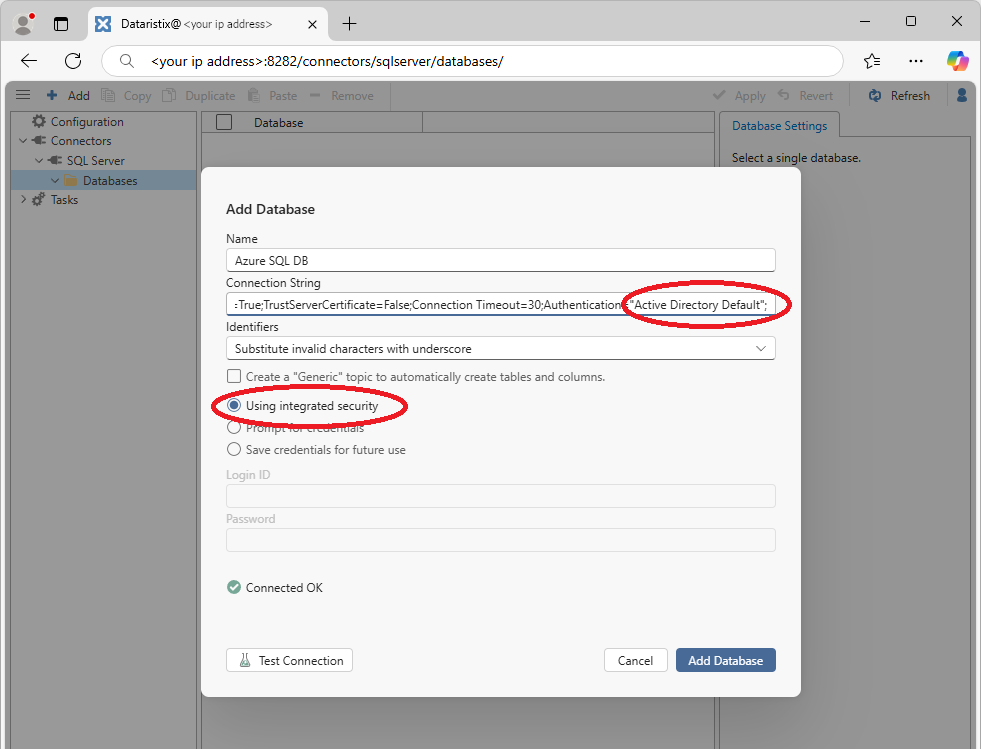

Browse to your instance, then add a database to the connector for SQL Server with a connection string like this (adapt for your SQL Server name and SQL Server database name):

Server=tcp:examplesqlserver.database.windows.net;Initial Catalog=examplesqldb;Encrypt=True;TrustServerCertificate=False;Connection Timeout=30;Authentication="Active Directory Default";

Note that "Authentication" is simply set to "Active Directory Default" and you can use "Integrated Security" without entering any credentials.

Test the connection, then proceed to configure database topics and tasks to send data to the database.

Add other Dataristix Connectors to the Terraform file

To add other connector modules to the Terraform file, copy the existing container block for the SQL Server connector, then reference container images of other connector modules instead of the "dataristix-for-sqlserver" image. If the added connector requires other ports to be open (for example, an MQTT broker port or OPC UA reverse-connect port), then expose those ports also.

Don't forget to add connector names to the list of connector modules in the "--modules" argument of the Dataristix Core container definition within the Terraform file.
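The steps above could look roughly like the following sketch for an MQTT connector. Several details are assumptions and should be checked before use: the image name "dataristix-for-mqtt" is only guessed from the naming pattern of the SQL Server image, port 1883 is the conventional unencrypted MQTT port and may not be what your setup needs, and the exact spelling of the comma-separated module list should be verified against the Dataristix documentation.

```hcl
# Sketch only: these blocks belong inside the existing
# resource "azurerm_container_group" "dataristix1_container_group" { ... } block.

# Assumption: the connector needs an inbound MQTT broker port (1883 by convention).
exposed_port {
  port     = 1883
  protocol = "TCP"
}

# Dataristix for MQTT (copied from the SQL Server connector block, then adapted)
container {
  name = "dataristix1-for-mqtt"
  # Assumption: image name guessed from the "dataristix-for-sqlserver" pattern.
  image  = "index.docker.io/dataristix/dataristix-for-mqtt:latest"
  cpu    = "0.5"
  memory = "1.0"

  # Ensure that Dataristix can find other containers in the group
  environment_variables = { KUBERNETES_SERVICE_HOST = "1" }

  ports {
    port     = 1883
    protocol = "TCP"
  }

  volume {
    name                 = "dataristix1-data-mqtt"
    mount_path           = "/dataristix-data"
    share_name           = azurerm_storage_share.dataristix1-data-storage-share.name
    storage_account_name = azurerm_storage_account.dataristix1datastorage.name
    storage_account_key  = azurerm_storage_account.dataristix1datastorage.primary_access_key
  }

  volume {
    name                 = "dataristix1-secret-mqtt"
    mount_path           = "/dataristix-secret"
    share_name           = azurerm_storage_share.dataristix1-secret-storage-share.name
    storage_account_name = azurerm_storage_account.dataristix1secretstorage.name
    storage_account_key  = azurerm_storage_account.dataristix1secretstorage.primary_access_key
  }
}

# In the Dataristix Core container, extend the module list accordingly, for example:
# commands = ["dotnet", "Rensen.Uaol.Core.Console.dll", "--pod", "--modules=\"SQL Server,MQTT\""]
```

The volume names must be unique within each container, which is why the sketch uses the "-mqtt" suffix in the same way the original file uses "-sqlserver".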

Pin container image versions

The example Terraform file references the "latest" tag for container images. For production use, you may want to change this to a specific version.
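One way to pin the version in a single place is to introduce a variable for the image tag and build the image references from it. This is a sketch, not part of the original file: the variable name "DATARISTIX_IMAGE_TAG" and the local value names are made up for illustration, and the tag itself must be a version that actually exists on Docker Hub.

```hcl
# Hypothetical variable (not in the original file). It has no default on purpose,
# so that a specific version must be supplied explicitly.
variable "DATARISTIX_IMAGE_TAG" {
  type        = string
  description = "Container image tag used for all Dataristix images"
}

locals {
  dataristix_registry = "index.docker.io/dataristix"

  # Image references built from the pinned tag
  proxy_image     = "${local.dataristix_registry}/dataristix-proxy:${var.DATARISTIX_IMAGE_TAG}"
  core_image      = "${local.dataristix_registry}/dataristix-core:${var.DATARISTIX_IMAGE_TAG}"
  sqlserver_image = "${local.dataristix_registry}/dataristix-for-sqlserver:${var.DATARISTIX_IMAGE_TAG}"
}

# In each container block, replace the literal image string, for example:
#   image = local.core_image
```

The tag could then be supplied in the same way as the Docker credentials, for example with $env:TF_VAR_DATARISTIX_IMAGE_TAG="<version tag>" in Cloud Shell.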

Finally

To destroy everything that Terraform has created, run this command in your Cloud Shell:

terraform destroy

If you get prompted for Docker credentials, then the previous Cloud Shell session may have expired. You can cancel the Destroy action, configure the environment variables again as described earlier and try again, or enter credentials when prompted.

Finally, here is the entire Terraform file:

terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~>3.0"
    }
    random = {
      source  = "hashicorp/random"
      version = "~>3.0"
    }
  }
}

provider "azurerm" {
  features {}
}

# Expect Docker credentials defined in environment variables.
# Set up credentials to avoid rate limits when downloading Dataristix container images.
# In Cloud Shell, set up the variables as follows:
# $env:TF_VAR_DOCKER_USERNAME="<your username>"
# $env:TF_VAR_DOCKER_PASSWORD="<your password>"
variable "DOCKER_USERNAME" {
  type = string
}

variable "DOCKER_PASSWORD" {
  type = string
}

# create Dataristix resource group
resource "azurerm_resource_group" "dataristix1" {
  name     = "dataristix1"
  location = "centralus"
}

# create user defined identity for the Dataristix container group
resource "azurerm_user_assigned_identity" "dataristix1_user_assigned_identity" {
  name                = "dataristix1_user_assigned_identity"
  resource_group_name = azurerm_resource_group.dataristix1.name
  location            = azurerm_resource_group.dataristix1.location
}

# create data storage volumes for the Dataristix instance
resource "azurerm_storage_account" "dataristix1datastorage" {
  name                     = "dataristix1datastorage"
  resource_group_name      = azurerm_resource_group.dataristix1.name
  location                 = azurerm_resource_group.dataristix1.location
  account_tier             = "Standard"
  account_replication_type = "LRS"
}

# create secret storage volumes for the Dataristix instance
resource "azurerm_storage_account" "dataristix1secretstorage" {
  name                     = "dataristix1secretstorage"
  resource_group_name      = azurerm_resource_group.dataristix1.name
  location                 = azurerm_resource_group.dataristix1.location
  account_tier             = "Standard"
  account_replication_type = "LRS"
}

# create shared storage folder "dataristix-data"
resource "azurerm_storage_share" "dataristix1-data-storage-share" {
  name                 = "dataristix1-data-storage-share"
  storage_account_name = azurerm_storage_account.dataristix1datastorage.name
  quota                = 5
}

# create shared storage folder "dataristix-secret"
resource "azurerm_storage_share" "dataristix1-secret-storage-share" {
  name                 = "dataristix1-secret-storage-share"
  storage_account_name = azurerm_storage_account.dataristix1secretstorage.name
  quota                = 5
}

# create container group
resource "azurerm_container_group" "dataristix1_container_group" {
  name                = "dataristix1_container_group"
  location            = azurerm_resource_group.dataristix1.location
  resource_group_name = azurerm_resource_group.dataristix1.name
  ip_address_type     = "Public"
  os_type             = "Linux"

  image_registry_credential {
    username = var.DOCKER_USERNAME
    password = var.DOCKER_PASSWORD
    server   = "index.docker.io"
  }

  # assign the user-assigned identity to the container group
  identity {
    type         = "UserAssigned"
    identity_ids = [azurerm_user_assigned_identity.dataristix1_user_assigned_identity.id]
  }

  exposed_port {
    port     = 8282
    protocol = "TCP"
  }

  # Dataristix Proxy
  container {
    name   = "dataristix1-proxy"
    image  = "index.docker.io/dataristix/dataristix-proxy:latest"
    cpu    = "0.5"
    memory = "1.0"

    # Ensure that Dataristix can find other containers in the group
    environment_variables = { KUBERNETES_SERVICE_HOST = "1" }

    ports {
      port     = 8282
      protocol = "TCP"
    }

    volume {
      name                 = "dataristix1-data-proxy"
      mount_path           = "/dataristix-data"
      share_name           = azurerm_storage_share.dataristix1-data-storage-share.name
      storage_account_name = azurerm_storage_account.dataristix1datastorage.name
      storage_account_key  = azurerm_storage_account.dataristix1datastorage.primary_access_key
    }

    volume {
      name                 = "dataristix1-secret-proxy"
      mount_path           = "/dataristix-secret"
      share_name           = azurerm_storage_share.dataristix1-secret-storage-share.name
      storage_account_name = azurerm_storage_account.dataristix1secretstorage.name
      storage_account_key  = azurerm_storage_account.dataristix1secretstorage.primary_access_key
    }
  }

  # Dataristix Core
  container {
    name   = "dataristix1-core"
    image  = "index.docker.io/dataristix/dataristix-core:latest"
    cpu    = "0.5"
    memory = "1.0"

    # Ensure that Dataristix can find other containers in the group
    environment_variables = { KUBERNETES_SERVICE_HOST = "1" }

    # Define modules that Core is expected to find in the "modules" argument, separated by comma.
    # Available modules include:
    # CSV, E-Mail, Excel, Google Sheets, MySQL, MQTT, OPC UA, Oracle, PostgreSQL, Power BI, REST, Script, SQL Server, SQLite
    # Ensure that the corresponding containers are configured as members of the container group.
    commands = ["dotnet", "Rensen.Uaol.Core.Console.dll", "--pod", "--modules=\"SQL Server\""]

    volume {
      name                 = "dataristix1-data-core"
      mount_path           = "/dataristix-data"
      share_name           = azurerm_storage_share.dataristix1-data-storage-share.name
      storage_account_name = azurerm_storage_account.dataristix1datastorage.name
      storage_account_key  = azurerm_storage_account.dataristix1datastorage.primary_access_key
    }

    volume {
      name                 = "dataristix1-secret-core"
      mount_path           = "/dataristix-secret"
      share_name           = azurerm_storage_share.dataristix1-secret-storage-share.name
      storage_account_name = azurerm_storage_account.dataristix1secretstorage.name
      storage_account_key  = azurerm_storage_account.dataristix1secretstorage.primary_access_key
    }
  }

  # Dataristix Connector modules below; add more as required.

  # Dataristix for SQL Server
  container {
    name   = "dataristix1-for-sqlserver"
    image  = "index.docker.io/dataristix/dataristix-for-sqlserver:latest"
    cpu    = "0.5"
    memory = "1.0"

    # Ensure that Dataristix can find other containers in the group
    environment_variables = { KUBERNETES_SERVICE_HOST = "1" }

    volume {
      name                 = "dataristix1-data-sqlserver"
      mount_path           = "/dataristix-data"
      share_name           = azurerm_storage_share.dataristix1-data-storage-share.name
      storage_account_name = azurerm_storage_account.dataristix1datastorage.name
      storage_account_key  = azurerm_storage_account.dataristix1datastorage.primary_access_key
    }

    volume {
      name                 = "dataristix1-secret-sqlserver"
      mount_path           = "/dataristix-secret"
      share_name           = azurerm_storage_share.dataristix1-secret-storage-share.name
      storage_account_name = azurerm_storage_account.dataristix1secretstorage.name
      storage_account_key  = azurerm_storage_account.dataristix1secretstorage.primary_access_key
    }
  }
}

Questions or problems?

If you have any questions or problems, then please do not hesitate to contact us!

Dataristix is a trademark of Rensen Information Services Limited. Microsoft, Microsoft Azure, and SQL Server are trademarks of Microsoft Corporation. Terraform is a trademark of HashiCorp. All other product names, trademarks and registered trademarks are the property of their respective owners.